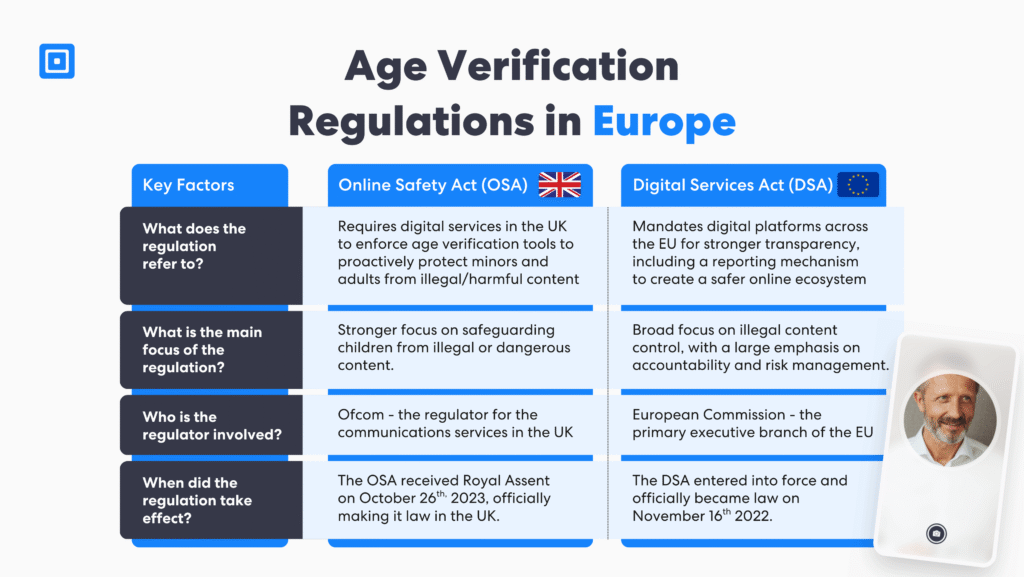

TL;DR: The UK’s Online Safety Act 2023 and the EU’s Digital Services Act now pose a serious challenge to businesses that lack multilayered age verification checks. Both regimes aim to protect children from harmful and illicit content, but they differ in key ways that businesses must understand to maintain compliance.

How the Online Safety Act and the Digital Services Act Protect Users

With the digital markets rapidly evolving, there comes a growing need to shield users from harmful content and illegal activities online. The Online Safety Act 2023 and the Digital Services Act are two landmark regulations designed to create new rules and a safer environment across platforms, social media, and digital services that allow users to post content online.

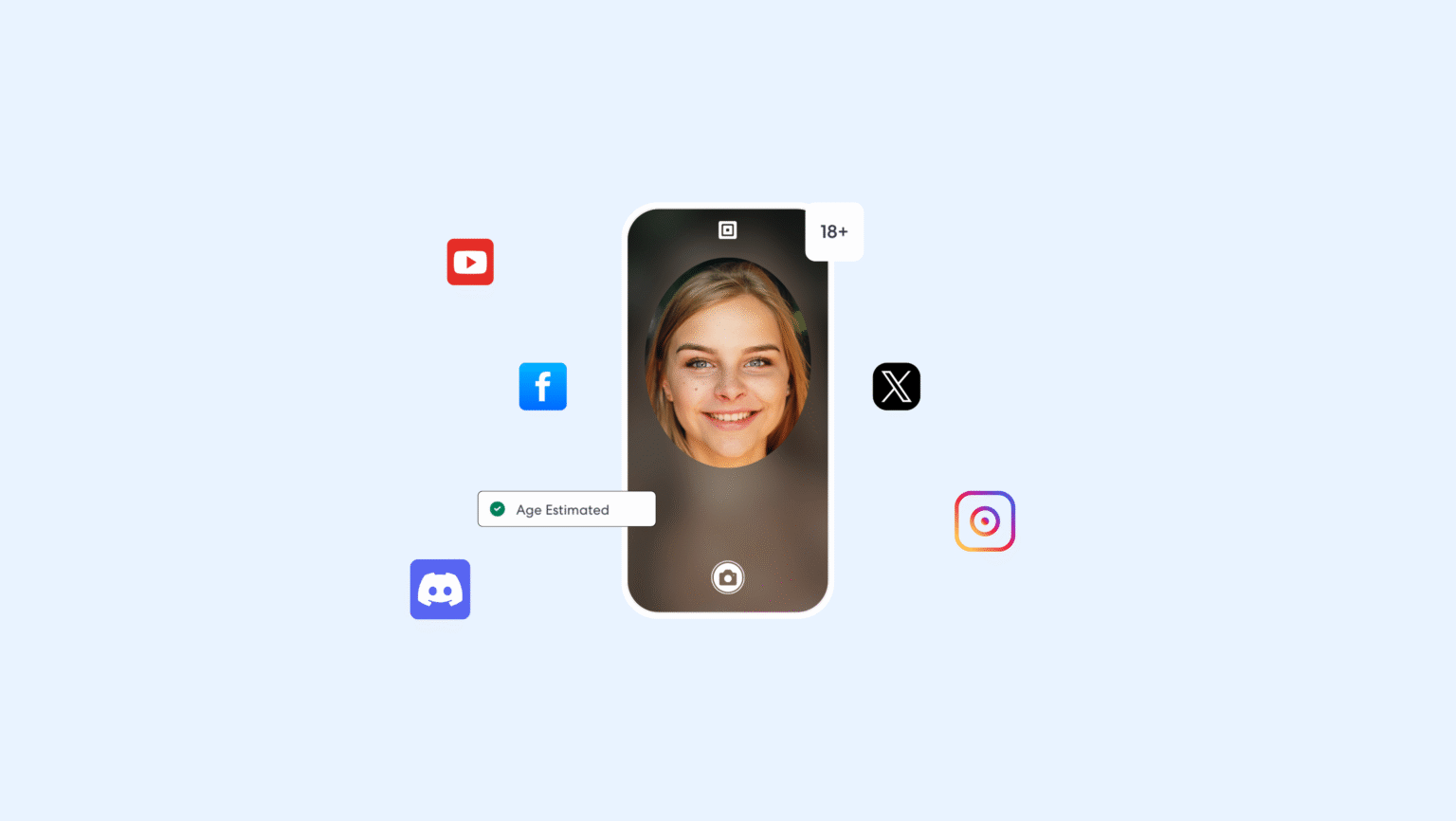

The OSA places an emphasis on safeguarding fundamental rights for children. By requiring online platforms to proactively tackle harmful activities and implement age verification tools, the Act prioritizes removing illegal content and giving users more control over the content they encounter. It also introduces criminal offences to address online abuse and dangerous stunts.

In contrast, the DSA impacts all online platforms operating within the European Union, including Very Large Online Platforms (VLOPs) and Very Large Online Search Engines (VLOSEs). The DSA sets out rules for these digital platforms, requiring them to publish annual reports on their online safety compliance. They must disclose how their algorithms operate and provide explanations for content removal decisions.

By enforcing stricter controls on targeted advertising and mandating action against illegal content, the regulations target online platforms that host child sexual abuse material, intimate image abuse, and content promoting harmful substances. Both the OSA and the DSA require platforms to take proactive steps to protect users and prevent the spread of illegal content.

Age estimation, proofing, and layered verification are no longer optional.

Social media services, video-sharing platforms, forums, and consumer cloud file storage and sharing sites are all impacted by these regulations. The rules are designed to ensure that companies act quickly to remove unsafe content such as online pornography, illegal drugs, and material encouraging self-harm, and to give users more control over their online experience.

Scope and Jurisdiction of the Online Safety Act 2023 and Digital Services Act

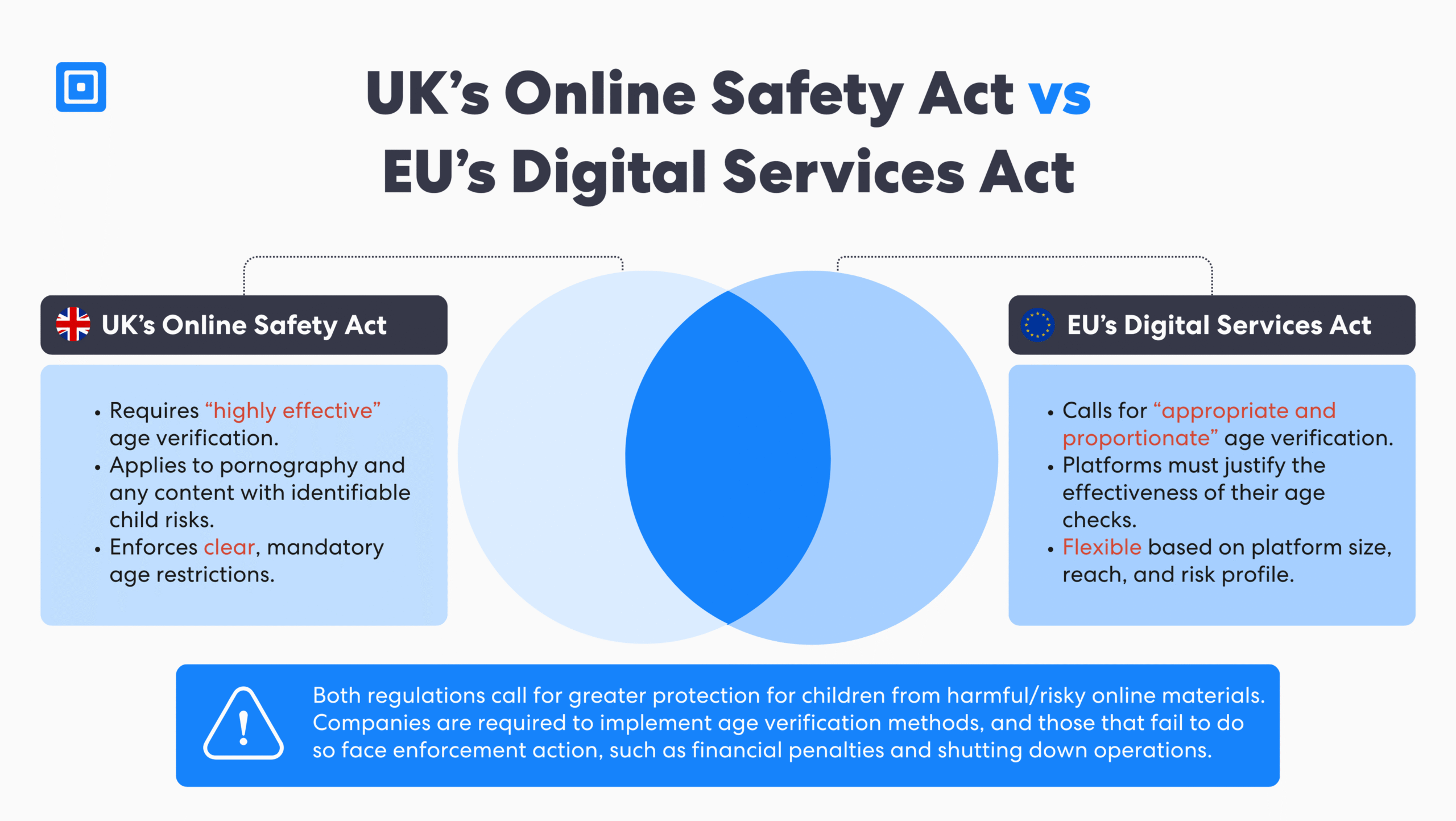

The Online Safety Act and the Digital Services Act take different approaches to online safety and age verification. In terms of sectoral scope, both apply to online instant messaging services, social media companies, and online forums that enable user-generated content, regardless of the provider’s geographic location. The key factor is whether the service targets users in the United Kingdom or the European Union.

Companies operating digital services may be subject to both regulatory frameworks, even if they are based outside these regions. Covered services include search engines, social media platforms, video-sharing services, file-sharing tools, online messaging and forums, dating services, and cloud hosting providers.

While the OSA enforces a UK-centric, rules-based compliance model, the DSA takes a layered, risk-based approach tailored to platform scale and function. Despite these differences, both frameworks apply to digital services that host user content and operate in or target the UK or EU. Compliance is therefore required regardless of where a company is headquartered.

Age Verification Obligations For Online Platforms with the Online Safety Act 2023 and Digital Services Act

The Online Safety Act 2023 requires platforms to implement “highly effective” age verification mechanisms, particularly for pornography and content that could harm minors. This obligation extends to legal content when it presents identifiable risks to children. The OSA mandates that platforms establish and enforce clear age restrictions to prevent underage access.

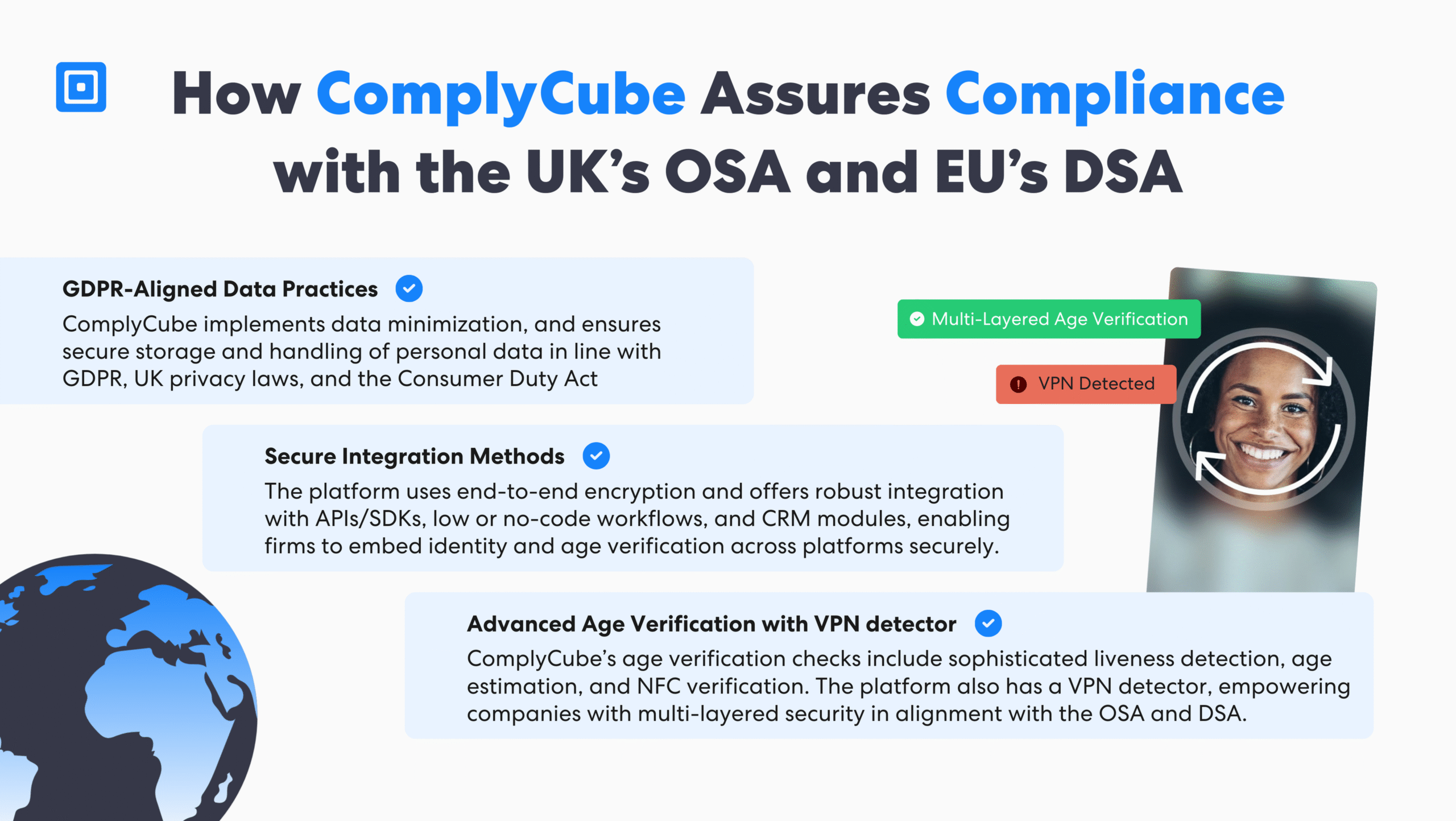

Approved verification methods include government-issued ID checks, biometric authentication, AI-powered facial age estimation, and systems resistant to circumvention tactics such as VPN use. For example, if a user tries to hide their location using a VPN, ComplyCube can detect and flag the session as part of a biometric authentication check, preventing the user from continuing until they have verified their age.

In contrast, the Digital Services Act (DSA) calls for “appropriate and proportionate” measures, offering more flexibility depending on the platform’s size, reach, and risk profile. Although less prescriptive than the OSA, the DSA still obliges platforms to demonstrate the effectiveness of their age verification processes, especially where minors may be exposed to harmful content.

Regulatory enforcement under the DSA expects a clear justification of how protective measures are applied in practice. Despite their differences, both acts share a common objective: safeguarding children online through enforceable age limits and clear accountability standards. Learn more here about identity fraud in the UK: The Price of Identity Fraud in the UK.

Transparency and Reporting Duties for the Online Safety Act 2023 and Digital Services Act

Both the Online Safety Act 2023 and the Digital Services Act require platforms to publish annual transparency reports, with the first set due by July 2025. These reports must cover takedown requests, moderation metrics, age verification methods, and broader risk mitigation efforts.

While the DSA focuses on reducing systemic risks through flexible, risk-based obligations, the OSA enforces stricter operational compliance aligned with Ofcom-approved standards. Together, the laws aim to enhance accountability and ensure child safety across digital platforms through regular, verifiable disclosures.

Illegal Content and Platform Liability for the Online Safety Act 2023 and Digital Services Act

The OSA requires platforms to proactively address illegal and harmful content, with specific illegal content duties to prevent illegal harms. Its enforcement powers include fines of up to 10% of global turnover and blocking orders for persistent breaches. The Act prioritizes child safety by reducing exposure to self-harm content and child sexual abuse material (CSAM), and it covers content that encourages or assists serious self-harm.

The OSA also sets out what is now classified as illegal content and what constitutes a criminal offence. Enforcement actions are triggered when there is a material risk of significant harm to UK users. The DSA, by comparison, provides for fines of up to 6% of global turnover, is enforced by national authorities with European Commission (EC) oversight, and focuses on Very Large Online Platforms (VLOPs) and algorithmic accountability.

Case Study: TikTok Under DSA Enforcement (2024)

Overview

In February 2024, the European Commission opened an investigation into TikTok for suspected violations of the Digital Services Act (DSA). The inquiry targeted key areas, including child protection, advertising transparency, algorithmic data access, and risk management around potentially addictive platform design.

Particular attention was paid to the effectiveness of TikTok’s age verification systems and whether they could protect minors from dangerous content. The platform’s structure and its contribution to addictive user behavior were also investigated.

Actions and Response

The European Commission’s investigation into TikTok focused on three critical areas: child safety mechanisms, transparency in advertising practices, and the platform’s data access policies. Central to the inquiry was the algorithmic design of TikTok’s systems and their potential role in promoting harmful behaviors, particularly among minors.

Resolution

In August 2024, the European Commission announced in a press release that TikTok had agreed to remove the “TikTok Lite” rewards feature. This feature had come under scrutiny for its addictive design and its potential effect on children. The case underscores that DSA enforcement is not purely reactive. Regulators are now shaping platform design and safeguarding fundamental rights, especially in critical areas such as youth engagement and algorithmic risk.

Advertising and Content Profiling Controls for Children Online

The Digital Services Act (DSA) explicitly prohibits behavioural targeting of minors, including profiling-based advertising and the use of dark pattern user interfaces within content recommendation systems. These new rules aim to reduce manipulative practices that disproportionately affect children on the internet.

While the Online Safety Act 2023 does not directly govern ad targeting, it imposes new obligations to prevent children from encountering harmful commercial content, particularly on search engines. Together, these frameworks signal a tightening regulatory stance on digital platforms’ responsibility toward young users.

More Control with Technical and Ethical Implementation Standards

To comply with both the Online Safety Act 2023 and the Digital Services Act, platforms are required to minimise data collection in line with GDPR, avoid storing sensitive age-related information, and maintain transparency around their age verification processes. They must also implement safeguards against re-identification risks and use secure integration methods such as SDKs or no-code modules.

ComplyCube’s platform supports these requirements through on-device verification, biometric liveness detection, NFC chip reading, and cross-device recognition, delivering both prescriptive compliance and adaptable implementation at scale.
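The data minimisation point above can be illustrated with a minimal sketch. It assumes a platform briefly receives a date of birth from a verification step and wants to persist only the outcome. The `AgeCheckRecord` schema, the `record_age_check` helper, and the default threshold of 18 are hypothetical names and values chosen for illustration; they are not part of either Act or of any vendor API.

```python
from dataclasses import dataclass
from datetime import date, datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AgeCheckRecord:
    """Hypothetical minimal record: the outcome only, never the date of birth."""
    user_id: str
    meets_threshold: bool
    method: str
    checked_at: datetime


def age_on(date_of_birth: date, today: date) -> int:
    """Return the age in whole years on the given day."""
    had_birthday = (today.month, today.day) >= (date_of_birth.month, date_of_birth.day)
    return today.year - date_of_birth.year - (0 if had_birthday else 1)


def record_age_check(
    user_id: str,
    date_of_birth: date,
    method: str,
    threshold: int = 18,  # assumed threshold; set per service and content type
    today: Optional[date] = None,
) -> AgeCheckRecord:
    """Derive a pass/fail flag and discard the date of birth itself."""
    today = today or date.today()
    return AgeCheckRecord(
        user_id=user_id,
        meets_threshold=age_on(date_of_birth, today) >= threshold,
        method=method,
        checked_at=datetime.now(timezone.utc),
    )
```

Because the stored record carries only a boolean, the method used, and a timestamp, a later breach or data subject request exposes far less than a stored birth date or ID image would.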

Governance, Risk Mitigation, and Senior Accountability

Under both the Online Safety Act 2023 and the Digital Services Act, platforms are obligated to perform regular risk assessments and actively mitigate any material risks to users, particularly children. These assessments must address exposure to content such as pornography, self-harm, or eating disorders, as well as systemic abuse risks arising from user-generated content.

Additionally, platforms must evaluate the impact of their advertising and recommendation systems on minors. The OSA requires the appointment of compliance officers and the maintenance of audit-ready records, while the DSA mandates robust content governance policies and internal audits for Very Large Online Platforms (VLOPs).

How OSA and DSA Impact SMEs and Niche Platforms

There is a common misconception that smaller platforms are exempt from regulatory obligations. In fact, the Online Safety Act 2023 applies based on the nature of content risk and accessibility, not the size of the platform. While the Digital Services Act provides procedural relief for micro and small enterprises (those with fewer than 50 employees and annual turnover not exceeding €10 million), it does not waive core responsibilities. All platforms, regardless of scale, are required to implement proportionate safety measures if their services are likely to be accessed by children.

Integration with Other New Data Protection Rules

Age verification requirements intersect with several key regulatory frameworks. Under the General Data Protection Regulation (GDPR), platforms must ensure data minimisation, establish a lawful basis for processing, and apply privacy-by-design principles. The eIDAS regulation sets digital identity assurance standards, particularly relevant for EU-based verifications.

Additionally, the Digital Markets Act places design scrutiny on gatekeeper platforms, including age-related considerations. Secondary legislation under the OSA and DSA provides the detailed thresholds and classifications necessary to operationalise these obligations for different service providers.

Ofcom Investigates 34 Pornography Websites for Failing Age Verification

Investigation of Primary Priority Content

On 31 July 2025, Ofcom launched formal investigations into four digital service providers operating a combined 34 pornographic websites. These platforms were suspected of breaching OSA requirements by failing to implement the new rules. Ofcom’s scrutiny focused on whether the platforms had adopted age assurance systems that met the legally required “highly effective” standard.

Potential Penalties for Non-Compliance

The outcome of these probes could set critical precedents for how similar platforms are regulated moving forward. Sanctions for non-compliance can reach up to £18 million or 10% of a platform’s qualifying worldwide revenue, whichever is higher. In extreme cases, Ofcom can also seek court orders requiring internet service providers to block access to offending platforms within the UK.
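To make the penalty ceilings concrete, here is a small sketch of the arithmetic using the figures cited in this article: the greater of £18 million or 10% of qualifying worldwide revenue under the OSA, and up to 6% of global turnover under the DSA. The function names are illustrative, and the results are upper bounds rather than predictions of any actual fine.

```python
from decimal import Decimal

# Figures as cited in this article.
OSA_FLOOR_GBP = Decimal("18000000")  # £18 million
OSA_RATE = Decimal("0.10")           # 10% of qualifying worldwide revenue
DSA_RATE = Decimal("0.06")           # 6% of global turnover


def osa_max_fine(qualifying_worldwide_revenue_gbp: Decimal) -> Decimal:
    """Upper bound under the OSA: whichever is higher of £18m or 10% of revenue."""
    return max(OSA_FLOOR_GBP, OSA_RATE * qualifying_worldwide_revenue_gbp)


def dsa_max_fine(global_turnover_eur: Decimal) -> Decimal:
    """Upper bound under the DSA: 6% of global turnover."""
    return DSA_RATE * global_turnover_eur
```

For a platform with £50 million in qualifying revenue, 10% is £5 million, so the £18 million figure sets the ceiling. At £500 million in revenue, the 10% figure (£50 million) takes over.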

Outcomes & Impacts

While final rulings from the ongoing investigations are still pending, early data suggest the OSA’s enforcement is already having a measurable impact. Major adult content platforms have experienced substantial traffic declines, with one report indicating a 47% drop in UK visits following the new rules’ implementation. This sharp decline suggests that age verification systems are actively being adopted, either voluntarily or under regulatory pressure.

Adopting a Unified Compliance Strategy

To comply with both the OSA and DSA, firms should adopt a holistic compliance strategy. Businesses must invest in dynamic risk management solutions that can trigger automated workflows according to user geolocation or document types.
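A geolocation-triggered workflow of the kind described above might be sketched as follows. This is a minimal illustration, assuming the platform already has a two-letter ISO country code for the session. The `AgeAssurancePolicy` type and `select_policy` function are hypothetical, and the standards attached to each regime are the phrases quoted earlier in this article, not an exhaustive statement of the legal tests.

```python
from dataclasses import dataclass

# ISO 3166-1 alpha-2 codes for the 27 EU member states.
EU_MEMBER_STATES = frozenset({
    "AT", "BE", "BG", "HR", "CY", "CZ", "DK", "EE", "FI", "FR", "DE", "GR",
    "HU", "IE", "IT", "LV", "LT", "LU", "MT", "NL", "PL", "PT", "RO", "SK",
    "SI", "ES", "SE",
})


@dataclass(frozen=True)
class AgeAssurancePolicy:
    """Hypothetical policy object used to pick a verification workflow."""
    regime: str
    standard: str


OSA_POLICY = AgeAssurancePolicy("OSA", "highly effective")
DSA_POLICY = AgeAssurancePolicy("DSA", "appropriate and proportionate")
DEFAULT_POLICY = AgeAssurancePolicy("NONE", "platform default")


def select_policy(country_code: str) -> AgeAssurancePolicy:
    """Map a session's country code to the regime whose standard applies."""
    code = country_code.strip().upper()
    if code == "GB":
        return OSA_POLICY
    if code in EU_MEMBER_STATES:
        return DSA_POLICY
    return DEFAULT_POLICY
```

A real deployment would likely extend the lookup with document type and content risk, and would need a fallback for sessions where the location signal is missing or unreliable.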

Partnering with an age verification vendor that offers SDKs and APIs for seamless integration makes testing and iteration cycles more efficient. Additionally, monitoring evolving codes of practice helps organizations stay on top of changing regulations. You can learn more here: What are Identity Verification Solutions?

Key Takeaways

- The Online Safety Act mandates stringent age checks and safety protocols to safeguard children.

- The EU Digital Services Act applies a proportionate, risk-based approach tailored to each platform’s size and function.

- Both the UK’s OSA and the EU’s DSA place greater ownership on firms to regulate content.

- Smaller services, including startups, are not exempt from scrutiny under the OSA and DSA.

- Platforms must adopt ethical age assurance that acknowledges fundamental rights, along with privacy-preserving design and unified compliance tools.

Strengthen Compliance with Age Verification Regulations Worldwide

The convergence of the Online Safety Act 2023 and the Digital Services Act reflects a shift in digital responsibility. Platforms need to move beyond reactive moderation and adopt safety-by-design principles, especially for children. Companies that provide open, user-first safety practices will earn trust and regulatory goodwill in a changing compliance landscape.

Explore seamless and bespoke multi-layered age assurance solutions on the ComplyCube platform. Onboard customers in seconds and avoid facing hefty enforcement action from the Online Safety Act and Digital Services Act. Speak with a member of the ComplyCube team today.

Frequently Asked Questions

Do the OSA and DSA apply to platforms with mostly adult audiences?

While a platform may target adults, it must still comply if minors can access it, particularly in cases like social media, where content is shared across various age groups. Platforms are required to take proportionate steps, such as implementing content filters and parental controls, to prevent minors from being exposed to harmful or adult content.

What counts as primary priority content under the Online Safety Act (OSA)?

Primary priority content includes pornography, as well as material that promotes eating disorders, self-harm, or suicide. This applies even if the content is legal, as it is deemed harmful to children.

How does OSA or DSA impact age verification on social media platforms?

Under the OSA, age verification is mandatory if children are likely to access the platform. The EU DSA also requires age assurance when content poses risks, meaning most mainstream social media platforms must adopt age verification measures.

Can companies or executives face criminal penalties under these acts?

The OSA allows for senior managers to face criminal liability if platforms fail to comply with safety and age verification duties. The DSA also permits penalties, including criminal consequences, depending on how individual EU member states implement the regulation.

How do the OSA and DSA protect fundamental rights while enforcing compliance?

Both acts include safeguards to balance regulation with user rights. They require platforms to respect freedom of expression and privacy while applying proportionate age verification, content moderation, and reporting obligations.