TL;DR: Deepfake detection tools help FinTechs stop synthetic identity fraud by identifying manipulated media in digital onboarding flows. Safeguarding against deepfake threats is crucial for maintaining the integrity of digital onboarding. Deepfake detection tools continue to evolve in 2026 and beyond, but what are the limitations of current tools?

What Are Deepfake Detection Tools and How Do They Work?

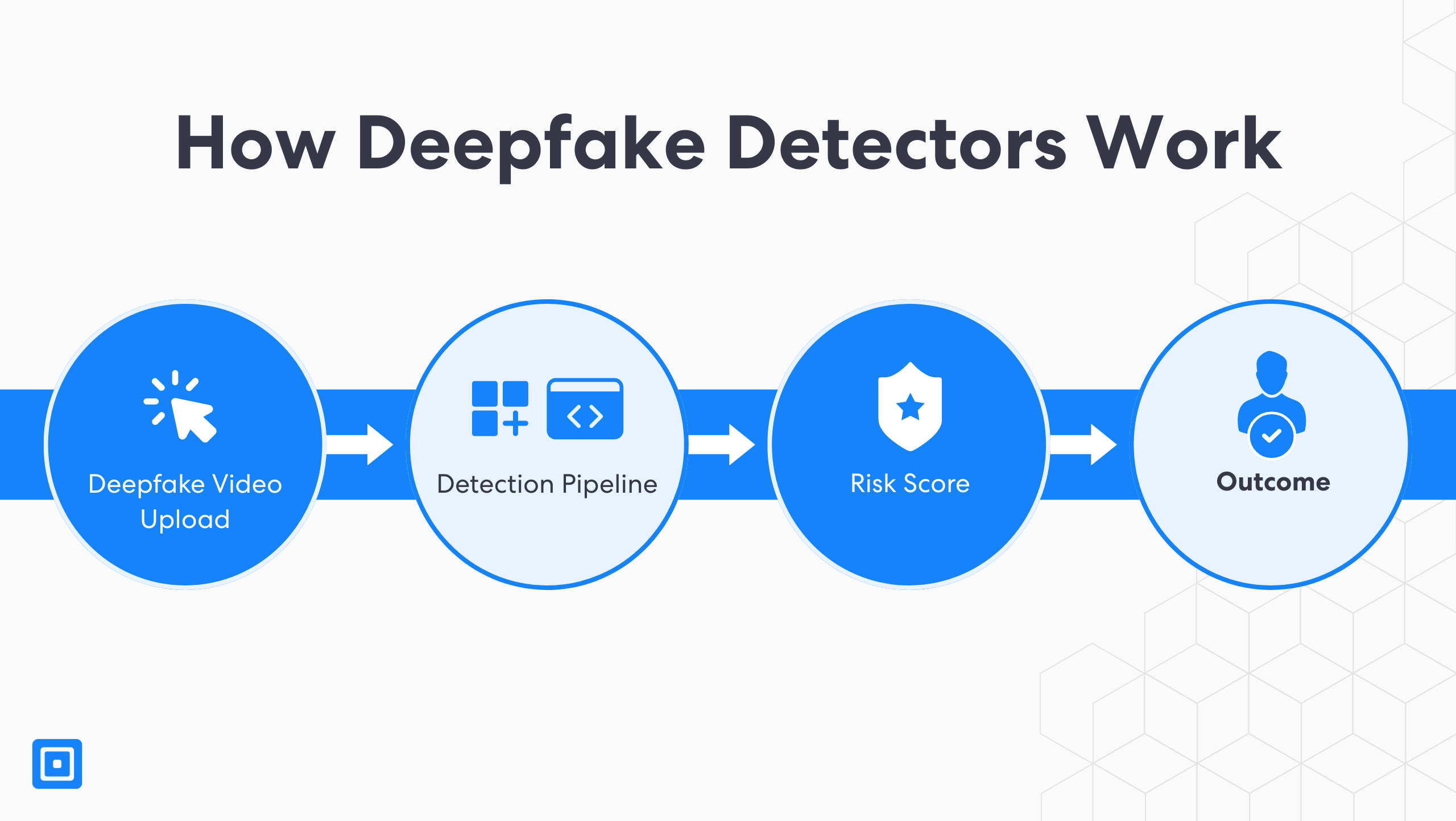

Deepfake detection software and tools are designed to identify and analyze manipulated or synthetic media, such as videos, images, and audio generated using artificial intelligence (AI). Fraudsters use such media to impersonate legitimate users, which can cause significant financial and reputational damage. Detection systems use a combination of AI tools, computer vision, and pattern recognition to distinguish authentic content from fake.

Many deepfake detectors produce scores that indicate the likelihood that a piece of media has been manipulated by bad actors. Others use a binary classification system that labels media as either authentic or manipulated, providing clear outcomes for decision-making. Likelihood scores allow risk engines to make nuanced decisions, such as granting access, requiring step-up verification, or flagging for manual review.
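The score-to-decision step described above can be sketched as follows. This is a minimal illustration, not any vendor's API: the `Decision` enum, the `route_by_score` function, and both threshold values are assumptions chosen for the example, and a real risk engine would tune them against its own fraud data.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    STEP_UP = "step_up_verification"
    MANUAL_REVIEW = "manual_review"


def route_by_score(manipulation_score: float,
                   approve_below: float = 0.20,
                   review_at_or_above: float = 0.70) -> Decision:
    """Map a manipulation-likelihood score in [0, 1] to an onboarding action.

    Both thresholds are illustrative placeholders, not recommended values.
    """
    if not 0.0 <= manipulation_score <= 1.0:
        raise ValueError("manipulation_score must be between 0 and 1")
    if manipulation_score < approve_below:
        return Decision.APPROVE          # low likelihood of manipulation
    if manipulation_score < review_at_or_above:
        return Decision.STEP_UP          # ambiguous: ask for more evidence
    return Decision.MANUAL_REVIEW        # high likelihood: send to an analyst
```

A binary classifier corresponds to the special case where the two thresholds coincide, which removes the middle band and with it the option of step-up verification.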

Under the hood, modern systems are trained on extensive datasets of known real and synthetic samples and compare each input against the patterns they have learned. As deepfake creators use increasingly sophisticated generative models, detection models must continually evolve to spot subtle inconsistencies that humans cannot see. Some advanced detection models also look at biological signals, such as subtle changes in blood flow, to distinguish real from synthetic media.

Frame-level classification is also used to analyze individual video frames for signs of manipulation. More broadly, deepfake detection tools leverage machine learning, computer vision, and biometric analysis to identify AI-generated deception. For example, some solutions use computer vision to detect micro-variations in skin color caused by blood flow, which are largely absent in synthetic media.
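Frame-level classification yields one score per frame, which then has to be combined into a single verdict for the whole video. The sketch below shows one possible aggregation rule, averaging the highest-scoring frames. The function name `aggregate_frame_scores` and the `top_fraction` parameter are hypothetical, and the per-frame scores are assumed to come from some upstream classifier that is not shown here.

```python
from statistics import mean


def aggregate_frame_scores(frame_scores: list[float],
                           top_fraction: float = 0.1) -> float:
    """Combine per-frame manipulation scores into one video-level score.

    Averages only the highest-scoring fraction of frames, so that a short
    manipulated segment is not diluted by many clean frames.
    """
    if not frame_scores:
        raise ValueError("frame_scores must not be empty")
    if not 0.0 < top_fraction <= 1.0:
        raise ValueError("top_fraction must be in (0, 1]")
    # Always keep at least one frame, even for very short clips.
    k = max(1, round(len(frame_scores) * top_fraction))
    top_frames = sorted(frame_scores, reverse=True)[:k]
    return mean(top_frames)
```

A plain average over all frames is the simpler choice, but it can hide a brief face swap inside an otherwise genuine recording, which is why this sketch focuses on the most suspicious frames.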

Identity Risk in Digital Finance

FinTechs operate in a digital world where customers rarely appear in person. This convenience, however, has created new opportunities for fraud, particularly through synthetic identities constructed from stolen and fabricated data. Deepfake technology allows bad actors to create convincing yet fraudulent images, videos, and voice recordings that mimic real individuals.

These forms of manipulation can bypass traditional identity verification systems and cause significant financial loss and compliance risk for FinTechs. Deepfakes are also increasingly used in phishing attacks and Business Email Compromise (BEC) scams, where attackers impersonate executives to authorize fraudulent transactions.

In this environment, deepfake detection tools are no longer optional. These systems analyze digital media for manipulation and help ensure that the person on the other end of a transaction or onboarding flow is real, authentic, and authorized. FinTechs must protect themselves and their customers from identity fraud and reputational harm, especially as 46% of businesses have been targeted by deepfake-fueled identity fraud.

Why Deepfake Detection Tools Matter for FinTechs

FinTech platforms process millions of remote identity checks each year for account openings, lending, payments, and compliance. In this context, fraud attempts often involve synthetic identities, where attackers blend stolen personal information with AI-generated media. Deepfake content and digital manipulation are becoming increasingly sophisticated, making it harder for traditional systems to detect fraud.

Deepfake video is a major point of vulnerability, especially during remote onboarding. Fraudsters can submit a manipulated video that appears to match the claimed identity and fool basic selfie verification systems. Without robust detection, these attacks lead to unauthorized access, financial loss, and regulatory scrutiny.

Deepfake detection tools work in tandem with biometric analysis (e.g., liveness detection, facial recognition) and document authentication. This layered approach helps separate legitimate applicants from fraudulent ones and strengthens trust in digital onboarding flows. Real-time deepfake detection can flag synthetic voices and videos in digital content, helping to prevent financial harm from deepfake-enabled cybercrime.

How Deepfake Detectors Fit Into FinTech Verification

Deepfake detection software integrates into Know Your Customer (KYC) and Anti-Money Laundering (AML) workflows through API access. When a user uploads a video during onboarding, the system initiates a detection pipeline that assesses facial movements, texture anomalies, blink patterns, and frame transitions in real time to identify signs of deepfake content.

The system then generates a risk score that reflects the likelihood that the media has been artificially manipulated. This score feeds into existing decision engines, triggering automated outcomes such as approval, rejection, or step-up verification. Beyond visual cues, these tools also evaluate metadata and behavioral signals, such as device fingerprinting or user interaction patterns. This layered scrutiny strengthens the integrity of digital onboarding and helps FinTechs stay ahead of synthetic identity threats.

In high-volume environments, real-time deepfake detection is essential. Unlike legacy systems, such as those used by some government agencies, which may struggle with live video or HD content, modern solutions for FinTechs are designed for performance and scalability, ensuring fast, accurate results across web and mobile channels. Key capabilities include media integrity checks, low-latency analysis, and full integration with enterprise risk and compliance infrastructure.

Detection Accuracy of Deepfake Detection Tools

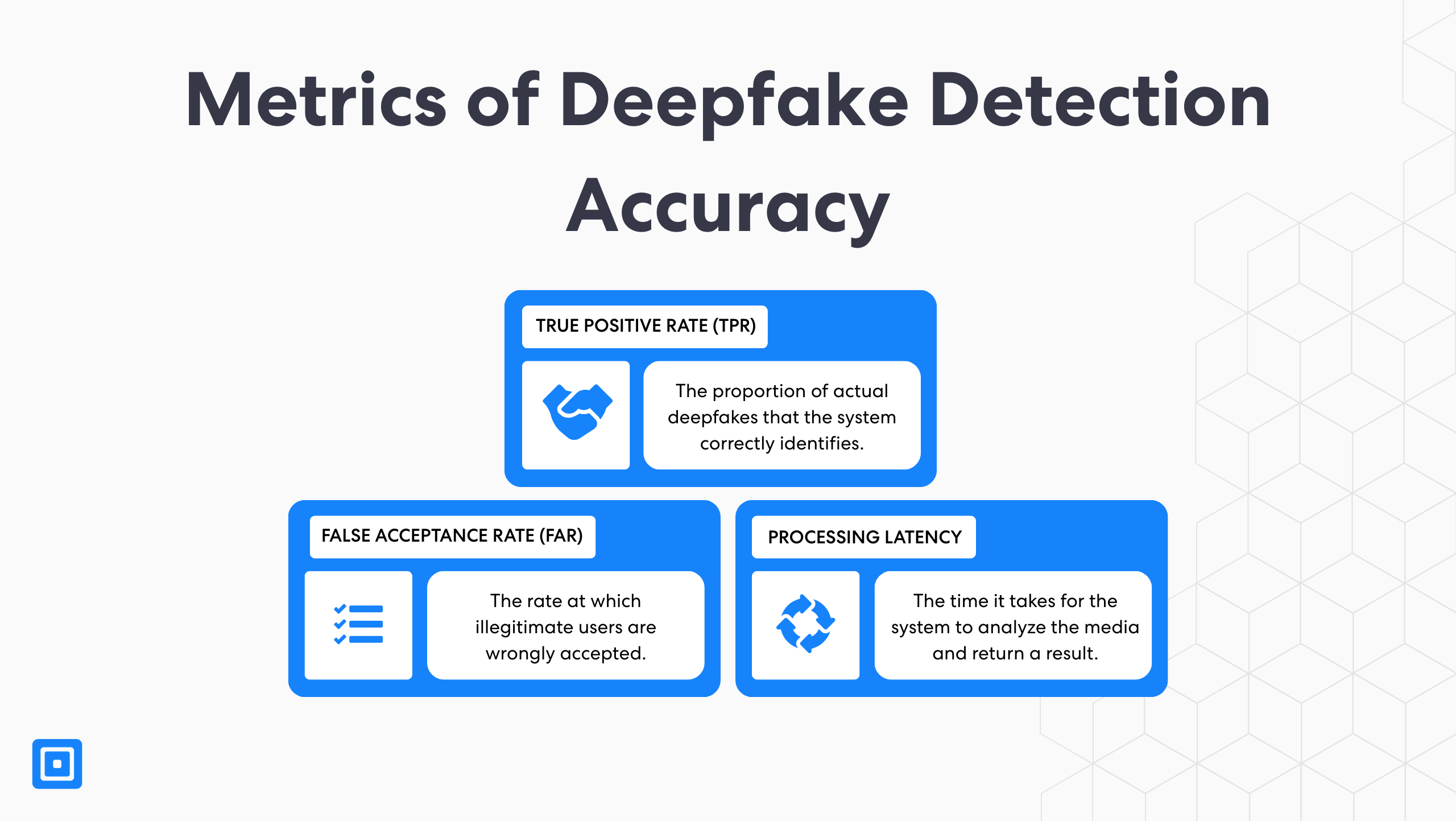

Detection accuracy is a core metric for evaluating deepfake detection solutions. It hinges on three key indicators: True Positive Rate (TPR), False Acceptance Rate (FAR), and processing latency. TPR measures the proportion of actual deepfakes that the system correctly identifies. FAR, on the other hand, represents the rate at which a biometric system mistakenly grants access to an unauthorized person, which in this context means accepting manipulated media as genuine. Lastly, processing latency refers to the time it takes for the system to analyze the media and return a result.
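As a rough illustration, the three indicators can be computed from a labeled test set like this. The `EvalSample` record, the `evaluate` function, the 0.5 threshold, and the choice of a nearest-rank 95th percentile for latency are all assumptions made for the example. FAR is taken here as the share of deepfake submissions the detector lets through, so under a single threshold it is simply the complement of TPR.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalSample:
    is_deepfake: bool   # ground-truth label from the test set
    score: float        # detector's manipulation likelihood, 0..1
    latency_ms: float   # time taken to return a result


def evaluate(samples: list[EvalSample], threshold: float = 0.5) -> dict[str, float]:
    """Compute TPR, FAR, and 95th-percentile latency at one threshold."""
    fakes = [s for s in samples if s.is_deepfake]
    if not fakes:
        raise ValueError("need at least one deepfake sample")
    # A deepfake counts as caught when its score reaches the threshold.
    flagged = sum(1 for s in fakes if s.score >= threshold)
    latencies = sorted(s.latency_ms for s in samples)
    # Nearest-rank percentile: smallest value covering 95% of samples.
    rank = math.ceil(0.95 * len(latencies))
    return {
        "tpr": flagged / len(fakes),
        "far": (len(fakes) - flagged) / len(fakes),
        "p95_latency_ms": latencies[rank - 1],
    }
```

Reporting a high percentile rather than the mean latency is a design choice: onboarding delays are usually felt in the slowest checks, not the typical ones.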

To stay ahead, FinTechs should test detection tools across varied conditions, including different lighting, backgrounds, devices, and demographic groups, to ensure fair and reliable performance. Video resolution and compression levels significantly impact real-time detection accuracy, so teams should also evaluate tools across a range of video qualities and compression settings to confirm their robustness in real-world scenarios.

Detection performance often varies across different skin tones and demographic groups. Demographic and cultural biases in training data can influence the effectiveness of deepfake detection tools, leading to lower accuracy for certain users and reducing overall reliability. FinTechs should prioritize solutions that demonstrate strong performance across diverse real-world conditions.
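One way to check for this kind of uneven performance is to break the true positive rate down by test group. The sketch below assumes a test set of known deepfakes, each tagged with a group label. The helper names `tpr_by_group` and `largest_gap` and the 0.5 threshold are hypothetical, and how groups are defined is a policy decision outside the scope of the code.

```python
from collections import defaultdict
from typing import Iterable


def tpr_by_group(deepfake_samples: Iterable[tuple[str, float]],
                 threshold: float = 0.5) -> dict[str, float]:
    """Return the true positive rate per group.

    Each sample is (group_label, detector_score) for a known deepfake.
    """
    totals: dict[str, int] = defaultdict(int)
    caught: dict[str, int] = defaultdict(int)
    for group, score in deepfake_samples:
        totals[group] += 1
        if score >= threshold:
            caught[group] += 1
    return {group: caught[group] / totals[group] for group in totals}


def largest_gap(rates: dict[str, float]) -> float:
    """Difference between the best- and worst-served groups."""
    if not rates:
        raise ValueError("rates must not be empty")
    return max(rates.values()) - min(rates.values())
```

A large gap between groups suggests that a headline accuracy figure is masking weaker protection for some users, which is worth raising with a vendor before deployment.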

Commercial Deepfake Detection Tools and the Marketplace

Commercial tools typically offer enterprise-grade solutions with scalability, high detection accuracy, and integration options. They often outperform open-source models in terms of support, reliability, and ongoing updates. Open-source tools provide greater transparency and customization, usually at the cost of lower detection capabilities and higher maintenance requirements.

Deepfake detectors built for financial services handle high volumes, report confidence scores, and integrate seamlessly with risk engines and case management systems. Hybrid approaches that combine open-source detection algorithms with commercial support can strike a balance between transparency and professional reliability. As a result, these tools provide thorough, scalable solutions for organizations managing complex identity verification workflows.

It’s important to set realistic expectations. Even top commercial tools may see real-world accuracy drop relative to controlled test environments. Detection accuracy depends on the quality and diversity of training data, as limited or non-representative datasets create vulnerabilities. Organizations should establish continuous improvement processes with regular audits and updates to ensure detection remains effective. Effective deployment relies on combining detection tools with broader risk signals and operational controls.

What Are The Current Limitations of Deepfake Detection Tools?

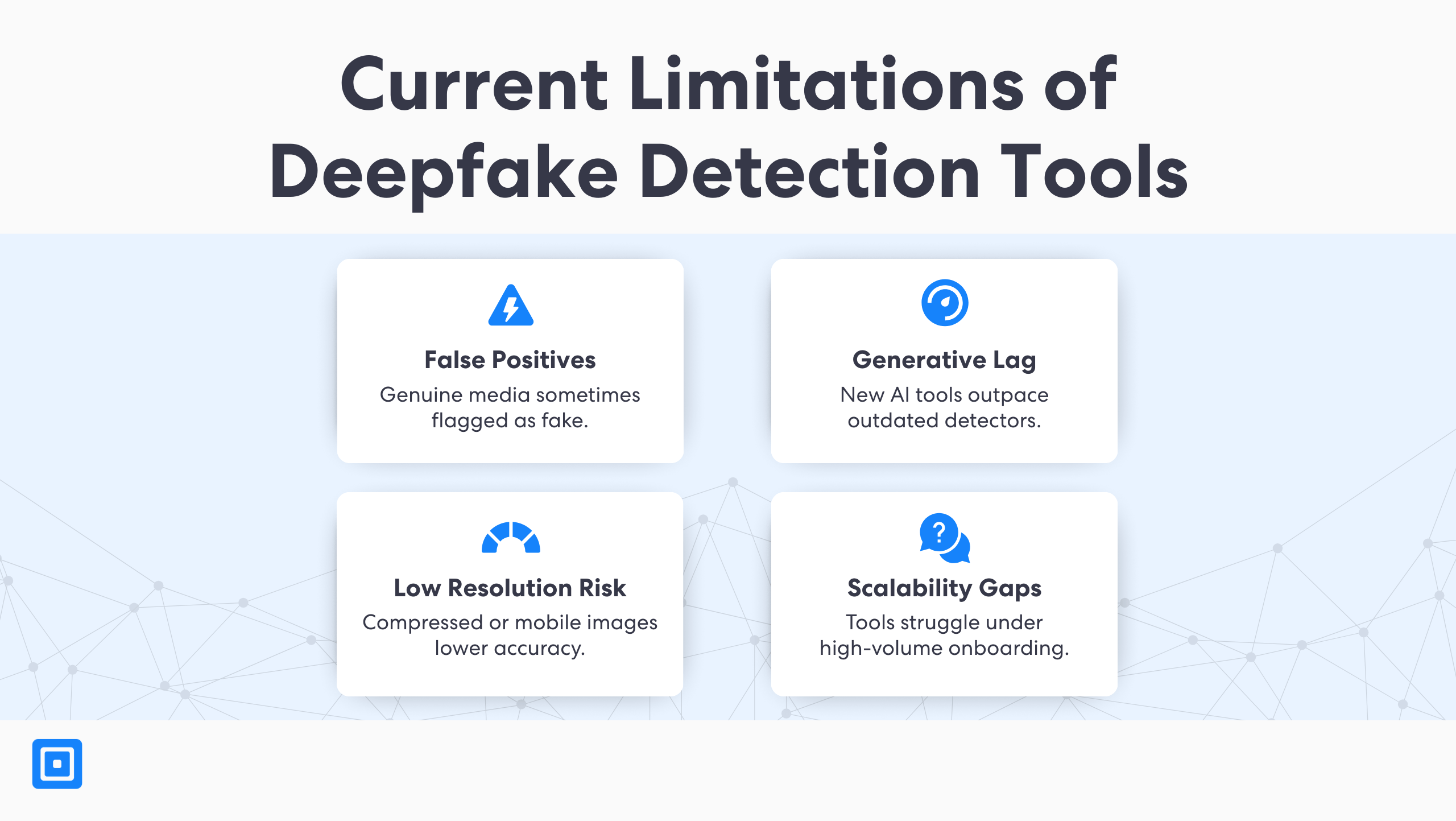

Despite recent advancements, the limitations of current deepfake detection remain significant. Detection models struggle with low-quality or highly compressed media, which can obscure visual cues essential for accurate analysis. They may fail to identify high-quality deepfakes generated using advanced methods. Additionally, some systems lag behind the latest developments in generative AI. Certain tools can also misclassify genuine content, resulting in false positives that disrupt onboarding workflows.

Many deepfake detection tools also struggle with real-time analysis, particularly during live video calls, where they cannot pause to examine inconsistencies. FinTechs therefore need fallback options, such as manual review or tiered verification, when confidence scores fall into ambiguous ranges. Audit trails and explainable scoring are also important for compliance and regulatory reporting.

The Role of AI Deepfake Detection Tools in Ongoing Defense

AI tools power both deepfake creation and detection, and the deepfake detection landscape is evolving rapidly. With new tools and techniques constantly emerging, it is essential for organizations and government agencies to stay ahead of new threats. As adversarial generative AI creates more realistic fraud attempts, FinTechs must rely on improved machine learning systems that adapt quickly. Learn more about AI-generated deepfake detection here: Deepfake Detection For The Modern Media Threat.

According to Harry Varatharasan, Chief Product Officer of ComplyCube, “Staying ahead of generative AI threats means deploying systems that don’t just detect deepfakes today, but learn from every new attempt tomorrow. ComplyCube’s approach focuses on continuous model retraining and intelligence-sharing to keep defenses adaptive and responsive.”

Threat actors are increasingly using generative AI deepfakes to manipulate public perception and enhance their attacks. In response, leading detection platforms incorporate continuous retraining, adversarial learning, and federated updates from cross-industry threat intelligence. Automated alerts and real-time monitoring are essential for detecting and responding to threats as they emerge. Together, these capabilities help maintain detection relevance and improve defenses against evolving manipulation techniques.

Detecting Deepfakes in Live Flows

Real-time deepfake detection is now a business necessity for digital onboarding. Advanced AI tools monitor facial movements, expression dynamics, and temporal cues to distinguish genuine users from manipulated submissions. Multimodal detection, which analyzes audio, visual, and metadata streams, further improves accuracy in live flows.
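Multimodal detection needs some rule for combining the per-stream results. A simple option, sketched below, is a weighted average that renormalizes when a stream is missing, for example a selfie video with no audio track. The `fuse_scores` function, the modality names, and the weights are illustrative assumptions rather than a description of any particular product.

```python
def fuse_scores(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-modality manipulation scores.

    Modalities absent from `scores` are skipped and the remaining
    weights are renormalized so the result stays in the 0..1 range.
    """
    usable = {name: s for name, s in scores.items() if name in weights}
    total_weight = sum(weights[name] for name in usable)
    if total_weight <= 0:
        raise ValueError("no weighted modalities available to fuse")
    weighted_sum = sum(weights[name] * s for name, s in usable.items())
    return weighted_sum / total_weight


# Hypothetical weights; real values would be tuned on labeled data.
EXAMPLE_WEIGHTS = {"visual": 0.5, "audio": 0.3, "metadata": 0.2}
```

Calling `fuse_scores({"visual": 0.8, "metadata": 0.1}, EXAMPLE_WEIGHTS)` fuses only the two available streams. A weighted average is easy to explain to auditors, though it lets a clean stream partly offset a suspicious one, so some teams may prefer to escalate on the maximum score instead.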

Integrating deepfake analysis into workflows with live video calls or instantaneous selfie checks is crucial, and it must support seamless operations without degrading user experience. Behavioral analysis complements automated detection by helping security teams interpret ambiguous results and identify suspicious activities. When combined with document verification and device intelligence, deepfake detection enhances onboarding security holistically.

Case study: Hong Kong Neobank Expansion and Deepfake Videos

Deepfake video fraud and falsified identity verification

In mid-2025, a Hong Kong–based digital bank experienced a coordinated fraud campaign during a regional expansion push. Fraudsters used AI-generated deepfake videos to submit false identity verification media. The videos mimicked facial movements and speech.

Multi-layered fraud prevention with deepfake detection tools

In response, the bank integrated a multi-layered fraud prevention system, anchored by a specialized deepfake detection engine. The team also implemented behavioral analytics to flag irregular user-device interactions and to block reused access points linked to prior fraud.

Integrating deepfake detection into digital onboarding flows

- Over 50 fraudulent account applications blocked in the first month after deployment

- 90% of flagged cases verified as attempted synthetic identity fraud

- Automated decisioning time improved by 35%, reducing onboarding delays

Compliance and Regulatory Implications

Regulatory expectations under frameworks such as the Financial Action Task Force (FATF) recommendations and EU directives such as AMLD6 require fintechs to implement robust identity verification and risk assessment systems. As synthetic identity fraud evolves, meeting these standards increasingly depends on the ability to detect and reject manipulated biometric and media inputs.

Deepfake detection tools play a critical role in fulfilling these obligations in 2026 and beyond. To enhance the reliability of KYC processes and strengthen internal controls, firms must verify the authenticity of user-submitted content. Well-validated systems also improve audit readiness, offering clear evidence of compliance to regulators.

Business Impact of Deepfake Detection Tools

Investing in deepfake detection technology delivers strong ROI by reducing fraud losses, minimizing manual reviews, and increasing operational efficiency. When integrated directly into onboarding flows, these tools also lower customer drop-off rates and improve overall conversion.

Beyond immediate cost savings, comprehensive detection systems reinforce long-term trust and enhance brand reputation, especially in markets with heightened digital fraud risk. By protecting the authenticity of user-submitted media, they help safeguard digital ecosystems and uphold media credibility in the face of increasingly realistic manipulated content.

Key Takeaways

- Deepfake detection tools are critical to defend against synthetic identity fraud.

- Detection accuracy directly impacts risk and user experience.

- Commercial tools provide scalable, enterprise‑ready solutions for FinTechs.

- Understanding the limitations of current deepfake detection tools shapes layered defenses.

- Deepfake detection tools continue to evolve in 2026, requiring adaptive, multi‑signal strategies.

Why FinTechs Should Partner with ComplyCube

In conclusion, ComplyCube delivers trusted, scalable fraud prevention with deepfake detection at its core. Its API-first platform supports advanced biometric checks, regulatory compliance, and seamless onboarding. Whether launching in a new market or tightening controls, ComplyCube enables FinTechs to operate with confidence. Speak to a ComplyCube team member to explore tailored solutions for deepfake detection and synthetic fraud risk mitigation.

Frequently Asked Questions

What are deepfake detection tools used for in FinTech?

Deepfake detection tools identify manipulated media in user-submitted documents, selfies, and videos, helping prevent fraud during onboarding and KYC processes. They are also used to identify synthetic media and support digital forensics in verifying the authenticity of user-submitted content, which is crucial for combating misinformation and safeguarding public trust.

How accurate are commercial deepfake detection tools today?

Leading tools achieve over 90% accuracy in lab environments and support real-time analysis. Key features of top deepfake detection tools include multi-layered detection, forensic reporting with confidence scores and heatmaps, and support for audio deepfake detection to identify AI-generated voices and voice cloning.

What are the limitations of current deepfake detection tools?

Current deepfake detection tools may struggle with poor video quality or new generative techniques. Background noise and compression algorithms used by social media platforms can further degrade detection accuracy. False positives are also possible, making multi-layered risk checks essential. Detection tools that use biological signal detection methods tend to show better performance in real-world scenarios compared to those relying solely on visual artifacts. Many deepfake detection tools also struggle with real-time analysis, especially during live video calls, where they cannot pause to examine inconsistencies.

Is deepfake detection mandatory for regulatory compliance?

While real-time deepfake detection is not explicitly mandated by most regulatory frameworks, it plays a critical role in meeting broader compliance expectations. Regulatory bodies such as the Financial Action Task Force (FATF) and the EU under eIDAS emphasize the need for thorough identity verification, media authenticity, and risk-based controls. Deepfake detection aligns with these principles by helping verify the integrity of biometric and video inputs, reduce impersonation risks, and strengthen digital onboarding. For FinTechs operating in high-risk or cross-border environments, implementing such tools demonstrates proactive compliance and enhances audit readiness.

How can ComplyCube support deepfake detection in FinTech?

ComplyCube integrates detection into its identity verification suite, combining it with liveness checks, document validation, and PEP/sanctions screening to offer end-to-end fraud defense. The platform supports forensic analysis and voice cloning detection as part of its comprehensive fraud defense suite, helping to examine and verify the authenticity of digital media and audio. Digital content authenticity is paramount, and these tools help detect signs of manipulation, including AI-generated images. As of 2026, effective deepfake detection tools analyze invisible biological signals and complex cross-media patterns to expose synthetic media. Deepfake detection tools are also used to detect and prevent BEC attacks for everyone, from government agencies to FinTechs.